Web frontend performance – distilled

Web performance used to be dominated (in the good old days of server-side rendering) by how fast your web servers delivered the dynamic content to the browser. This changed quite a lot with application-like web frontends. Their main promise is to replace the annoying request/response pauses with one longer waiting period at the beginning of the session, followed by near-instant responses to subsequent requests.

Here are some really good links I just discovered today – they all deal with various aspects of frontend web-performance. Let’s start.

Comparing MV* frameworks? There is a great project named TodoMVC that compares various frontend frameworks by implementing the same to-do application in each of them. Among them are Backbone.js, AngularJS, Ember.js, KnockoutJS, Dojo, YUI, Agility.js, Knockback.js, CanJS, Maria, Polymer, React, Mithril, Ampersand, Flight, Vue.js, MarionetteJS, Vanilla JS, jQuery and a lot more.

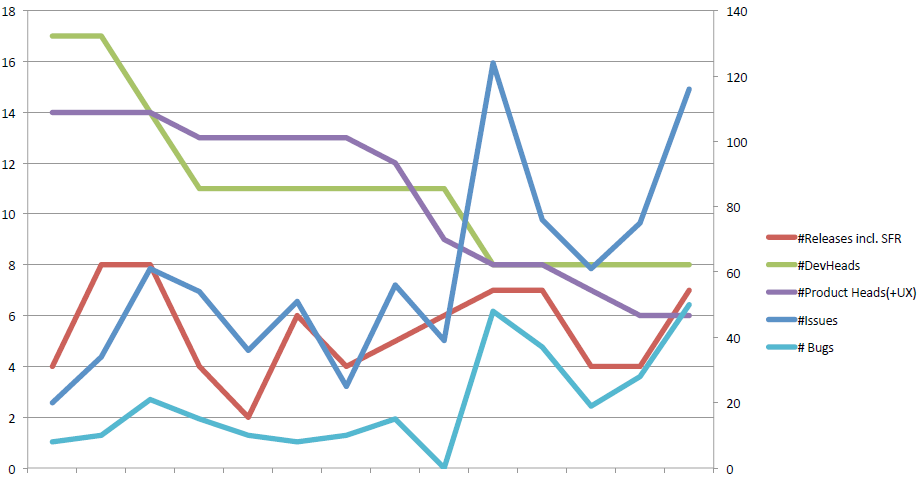

Performance impact comparison by Filament Group. A good deal of research went into their post “Research: Performance Impact of Popular JavaScript MVC Frameworks”, focusing on e.g. Angular.js, Backbone.js and Ember.js. Performance testing was done with the previously mentioned TodoMVC implementations. The raw data is accessible as well. Most interesting are the results (measuring average first render time):

Mobile 3G connection on Nexus 5

- Ember averages about 5 seconds

- Angular averages about 4 seconds

- Backbone averages about 1 second

PC via LAN

- Ember averages about 1.17 seconds

- Angular averages about 0.88 seconds

- Backbone averages about 0.29 seconds
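To make the gap easier to compare across the two setups, here is a small sketch that expresses each framework's average first render time as a multiple of Backbone's. The numbers are copied from the lists above (the 3G figures are rounded "about" values, so the ratios are rough); the helper name `slowdown_vs` is just an illustration and not part of the original research.

```python
# Average first render times in seconds, copied from the lists above.
# The 3G values are approximate ("about 5 seconds"), so treat ratios as rough.
first_render = {
    "Mobile 3G on Nexus 5": {"Ember": 5.0, "Angular": 4.0, "Backbone": 1.0},
    "PC via LAN": {"Ember": 1.17, "Angular": 0.88, "Backbone": 0.29},
}


def slowdown_vs(baseline, timings):
    """Express every timing as a multiple of the baseline framework's timing."""
    base = timings[baseline]
    return {name: round(seconds / base, 2) for name, seconds in timings.items()}


for scenario, timings in first_render.items():
    print(scenario, slowdown_vs("Backbone", timings))
```

On both setups Ember and Angular come out at roughly three to five times Backbone's first render time.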

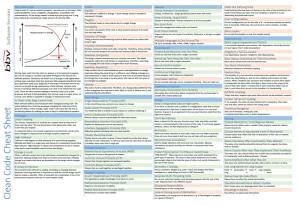

Practical hints to accelerate responsively designed websites. In his post “How we make RWD sites load fast as heck”, Scott Jehl (@scottjehl) gives some practical hints on what to focus on:

- Page weight isn’t the only measure; focus on perceived performance

- Shortening the critical path

- Going async

- Inlining code

- Shooting for 14kb

- Taking advantage of caches

- Using Grunt in the deploy pipeline
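The “shooting for 14kb” hint is the easiest one to check automatically, for example as a step in the deploy pipeline. Below is a minimal sketch, not Scott Jehl's own tooling. It assumes the 14 KB figure corresponds to roughly 10 TCP segments of 1460 bytes that a server can send in the first round trip, and that the page is served gzip-compressed; the function names and the budget constant are made up for illustration.

```python
import gzip

# Assumption: "14kb" is approximated as 10 TCP segments of 1460 bytes each,
# a commonly used initial congestion window. The post itself only says "14kb".
FIRST_ROUND_TRIP_BUDGET = 10 * 1460  # 14600 bytes


def compressed_size(html: str) -> int:
    """Return the gzip-compressed size of the page in bytes."""
    return len(gzip.compress(html.encode("utf-8")))


def fits_first_round_trip(html: str, budget: int = FIRST_ROUND_TRIP_BUDGET) -> bool:
    """True if the compressed page (including inlined CSS/JS) fits the budget."""
    return compressed_size(html) <= budget


if __name__ == "__main__":
    page = "<html><head><style>body{margin:0}</style></head><body>Hello</body></html>"
    print(compressed_size(page), fits_first_round_trip(page))
```

A build script could call `fits_first_round_trip` on the rendered HTML of each key page and fail the build when inlined code pushes the page over the budget.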

Angular 1.x and architecture problems. Another interesting blog article, by Peter-Paul Koch (@ppk), focuses explicitly on Angular. “The problem with Angular” talks about severe performance problems with Angular 1.x versions. In the post he notes:

“… Angular 2.0, which would be a radical departure from 1.x. Angular users would have to re-code their sites in order to be able to deploy the new version …”

Wow. That’s interesting and a good indication of serious architecture issues with Angular 1.x …

Thought-leading companies and performance. Good articles / blog posts from leading companies on page speed performance: