Anil Cheriyan shared his thoughts on where to focus to create a fast and better working IT environment in the financial services industry – to speed up the organization (see: https://enterprisersproject.com/article/2017/6/suntrust-cios-formula-speed-relies-cloud-devops). In his post he mentioned 5 areas you should look after to break with old habits and start creating a fast pacing environment.

Anil Cheriyan is Director/Deputy Commissioner, Technology Transformation Services for the U.S. Federal Government. Previously, he was managing partner of Phase IV Ventures, a consulting and advisory firm.

Cloud

Two important aspects associated with the terminology “Cloud”. First it’s important to understand the implications of the various cloud strategies (ranging from private cloud over hybrid constructs towards public clouds). Get your strategy clear on which areas to host where. Criteria to look at are: business value provided, business continuity, resilience, security. Second aspect is the organization. Get your people involved. They need to participate in the strategy definition. They will execute them actually. No time for information hiding and bimodal IT infrastructures.

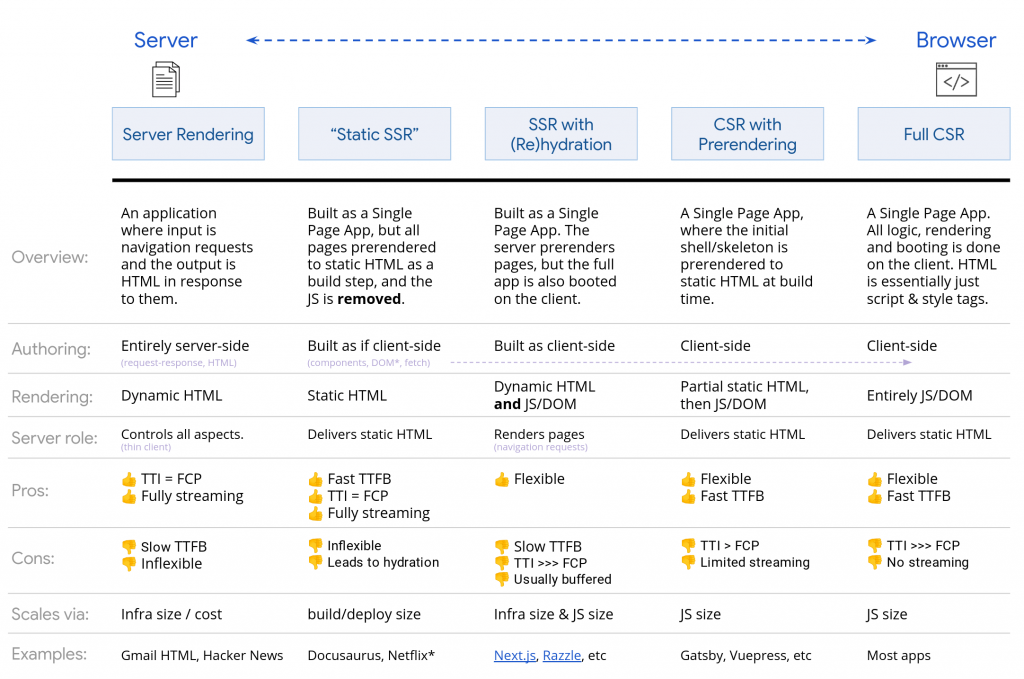

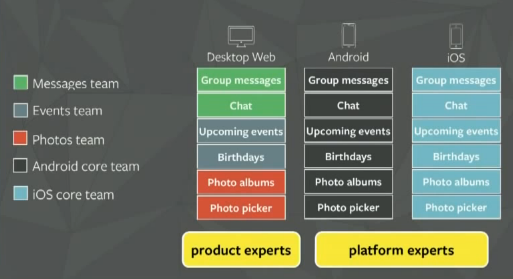

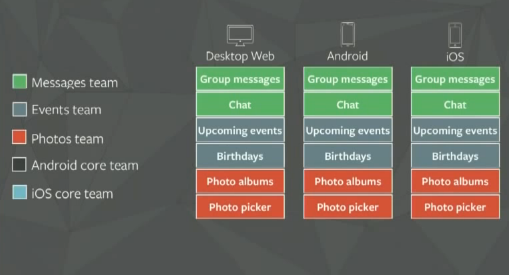

Modular architecture

Getting towards a modular architecture introduces flexibility in decisions, eliminates bottle necks and allows a decentralized governance. Today’s architectures are still monoliths or more advanced SOA stacks or somewhere in between. A more modular architecture exposes API’s via micro services. This architecture allows distributed ownership models. Complex is actually the implementation of these architecture rewrites. A lot of business related activities and the re-architecture work is a hard effort to combine.

DevOps

DevOps is finally all about the mindset of people and the break-up of silo-ed organizations. People need to learn and understand the importance of collaboration and trust. This sounds simple, turns out to be a heavy change project. Anil started pilot projects and introduced the true DevOps mindset and collaboration through success cases. It’s not about adopting rules and processes from the DevOps movement “by the books” – it’s about training your talent to work closer together.

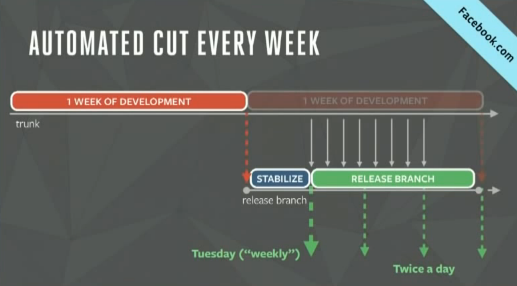

Agile development

Agile development in software development is quite wide spread and commonly used. The acceptance over waterfall models is – where appropriate – high. Issues occur if the agile software development processes get surrounded by traditional waterfall-oriented functions – control functions. The most challenging part is to get agility into release management, deployment and integration testing.

Design thinking

Most important aspect of design thinking is the customer centricity. Understanding the real problems of the user to be solved is at the core of the approach. Not hunting the 100% perfect solution with all nice and “useful” features. Going for the most valuable solution, ship it fast. This requires heavy re-thinking within the organization. It’s more about talent and collaboration models. Important is to get people together with a thorough understanding of the industry and processes to help solving the customer’s pain points.