Product Discovery Techniques

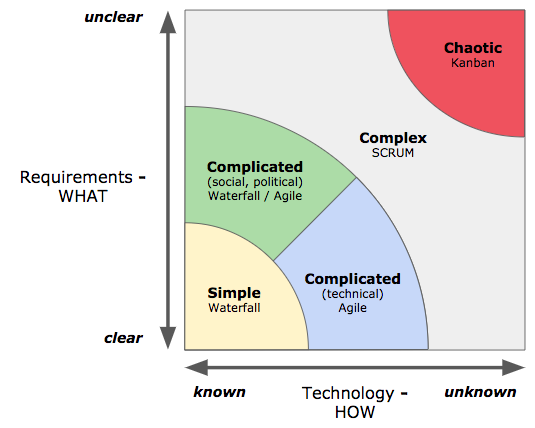

Don’t use these discovery techniques for bug fixes or optimization. They’re meant for real product discovery work: identifying and, above all, validating big new product ideas.

1. Framing

Framing is the activity that defines the problem space and clarifies how relevant the problem at hand really is. Do not spend too much time on framing.

See Marty’s blog for a more thorough description: https://svpg.com/assessing-product-opportunities/.

Opportunity Assessment

The Opportunity Assessment is enough 90% of the time.

- What business objective are you focused on? (Typically one of the OKR objectives.)

- How will you know if you have succeeded? (Typically one of the OKR key results.)

- What problem are you solving for our customer? (Do you really know it’s a problem?)

- Who are you solving that problem for? (Typically a target market or persona from the strategy.)

Internal Press Release

The Internal Press Release is not intended to go public; it’s for internal use only. The nominal audience is the new product’s customers, but the actual audience is the team, management and stakeholders. It’s typically 1.5 pages maximum and written in “Oprah-speak”, not “geek-speak”. Sometimes 3-4 pages of FAQ covering anticipated questions are added. The internal press release is structured as follows: heading, summary, problem, benefits, quote from you, customer quote, closing / call to action.

Amazon uses Internal Press Releases for big ideas / efforts (e.g. a site redesign or moving into a new country).

Alternatives to the Internal Press Release are the Happy Customer Letter, which describes the benefits for a customer as if written by that customer, and the CEO letter, which describes the benefits for the company.

Marty writes about the Internal Press Release and the Customer Letter on his blog: https://svpg.com/the-customer-letter/

Lean Canvas

The Lean Canvas is ideally used for a new business unit, a business line or a startup.

The canvas is described in great detail at LeanStack: https://leanstack.com/leancanvas

An alternative to the Lean Canvas is the Opportunity-Solution-Tree. Teresa Torres introduced it in her 2017 Mind the Product talk in London, “Critical Thinking For Product Teams”.

Marty talks about the different application areas of the Lean Canvas vs. the Opportunity Assessment on his blog: https://svpg.com/lean-canvas-vs-opportunity-assessment/.

2. Planning

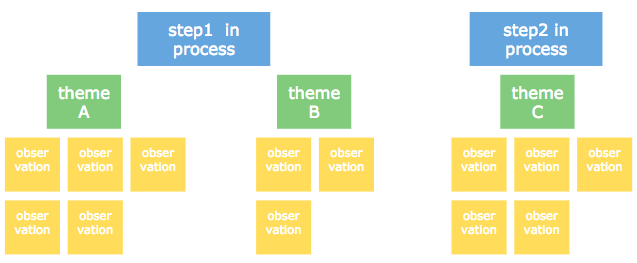

User Story Mapping

User Story Mapping helps to visualize and deconstruct the problem or solution space. It provides a holistic view and gives context. Through the collaborative process it encourages shared understanding, identifies holes in thinking and improves planning and estimates. Furthermore, it heavily influences prototypes and helps to scope releases for the product.

More information can be found in the book “User Story Mapping” by Jeff Patton: https://www.amazon.de/User-Story-Mapping-Discover-Product/dp/1491904909 or in “Design Thinking in a nutshell – what is it and what’s in for us?”

A very good example of how Workiva used User Story Mapping is “Interaction Design for Enterprise Teams” by Jason Moore on Slideshare.

Customer Discovery Programs

The basic idea of the Customer Discovery Program is to discover and develop a set of reference customers in parallel with discovering and delivering the product. At the stage where a reference customer signs up for the program, there is no product ready to be delivered. A Customer Discovery Program only makes sense for really big efforts, absolutely not for features. Serious enterprise customers are very likely to sign up because they have been burned by the “sell and run” practices of Oracle, SAP and the like.

A reference customer has bought the product without any side deals, runs it in production and loves it enough to tell the world about it. In Customer Discovery, we’re looking for “Earlyvangelists”. An Earlyvangelist is best characterized by these criteria:

- They have a problem.

- They understand they have a problem.

- They are actively searching for a solution.

- They have a budget allocated.

See also the definition of the Earlyvangelist at https://steveblank.com/2010/03/04/perfection-by-subtraction-the-minimum-feature-set/.

With the Customer Discovery Program it’s simple to tell whether product / market fit is reached: it is achieved once 6 reference customers in a single market segment (e.g. industry, geography, …) are found. The product / market-fit product is the smallest possible product that meets the needs of this group. If you find only 4 customers or fewer, product / market fit is invalidated and a pivot is needed. Work with 5-6 companies, and no more than 8. Talk to as many as possible, e.g. 50. Select only the most attractive ones for the Discovery Program; the other customers go into the beta program. Agree with the selected companies that they will act as discovery partners, and ensure the right level of access to people and input. Agree with them that they will become a public reference if they like the delivered product.
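The thresholds above can be written down as a small decision rule. The following is only a sketch of these notes, not an official method: the function name `assess_fit`, the data shape and the "inconclusive" verdict for exactly 5 reference customers are assumptions, since the notes only define the outcome for 6 or more and for 4 or fewer.

```python
from collections import Counter


def assess_fit(reference_customers, achieved_at=6, pivot_at_or_below=4):
    """Apply the rule of thumb from the notes to a list of reference customers.

    reference_customers: list of (customer_name, market_segment) tuples.
    Returns (best_segment, count, verdict). All names here are hypothetical.
    """
    # Reference customers only count together if they share one market segment.
    counts = Counter(segment for _, segment in reference_customers)
    if not counts:
        return None, 0, "invalidated"

    segment, count = counts.most_common(1)[0]
    if count >= achieved_at:
        verdict = "achieved"
    elif count <= pivot_at_or_below:
        verdict = "invalidated"
    else:
        # Assumption: the notes do not say what exactly 5 customers means.
        verdict = "inconclusive"
    return segment, count, verdict


if __name__ == "__main__":
    customers = [
        ("Acme", "retail"), ("Bolt", "retail"), ("Cargo", "retail"),
        ("Delta", "retail"), ("Echo", "retail"), ("Fox", "retail"),
        ("Gamma", "logistics"),
    ]
    print(assess_fit(customers))
```

Counting per segment rather than in total reflects the point that all reference customers must come from one single market segment.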

In Enterprise business the goal is to find a single product solution that fits all discovery partners. Again, it’s important that all partners are from one single market segment. In Consumer services you should identify 8-20 Earlyvangelists and agree with them on regular phone calls to synchronize.

Examples of customer discovery: OpenTable (SMB), Symantec (Enterprise), Bazaarvoice (B2B2C), Xoopit (Platform Services), Apple (internal tools), BarkBox (Consumer Service)

Marty’s blog on reference customers: https://svpg.com/the-power-of-reference-customers/

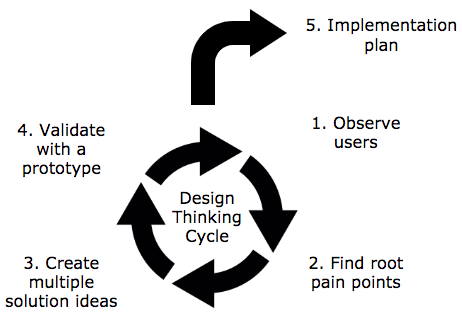

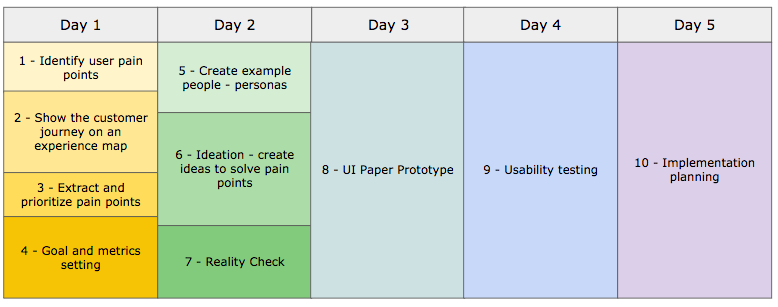

3. Ideation

Customer Interviews

Customer interviews are needed to understand your customer, to get rid of assumptions and to start working with facts. Marty summarizes the value of interactions with customers in his post “Don’t talk to customers?”. Below are the key questions to answer:

- Are your customers who you think they are?

- Do they really have the problems you think?

- How does the customer solve this problem now?

- What would it take for them to switch?

Additional Ideation Techniques:

- Concierge Test (see: https://pdmethods.com/concierge-testing/)

- Public APIs (let others innovate on your product) – be aware of misuse, e.g. Cambridge Analytica and Facebook

- Hack Days (directed and undirected)

- Data Spelunking (Hackathon on data)

4. Prototyping

A prototype should cut the time needed to provide something to look at by a factor of 10. See more on prototypes in Marty’s blog post “Flavors of Prototypes”.

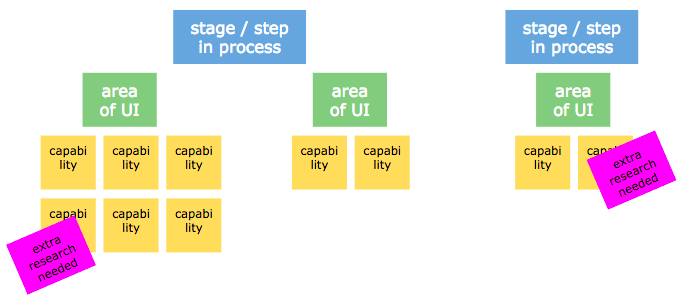

User Prototypes

User prototypes are fast and lightweight to create by nature. They are used for value testing with a consumer or customer to quickly gather feedback on both usability and value. Low-fidelity user prototypes are used for team-internal iterations. Use high-fidelity prototypes to showcase the idea internally to executives. The prototype is usually created by the Product Designer with support from the Product Manager. Ideally, when finished, the prototype can be used as a specification for the Delivery process: “prototype-as-spec”.

Paper prototypes are too limited by nature; use wireframing tools (e.g. Balsamiq, Axure RP, proto.io, Flinto, UXPin, Marvel, InVision, Adobe XD) instead. They allow interactions with the prototype and take no more effort to build.

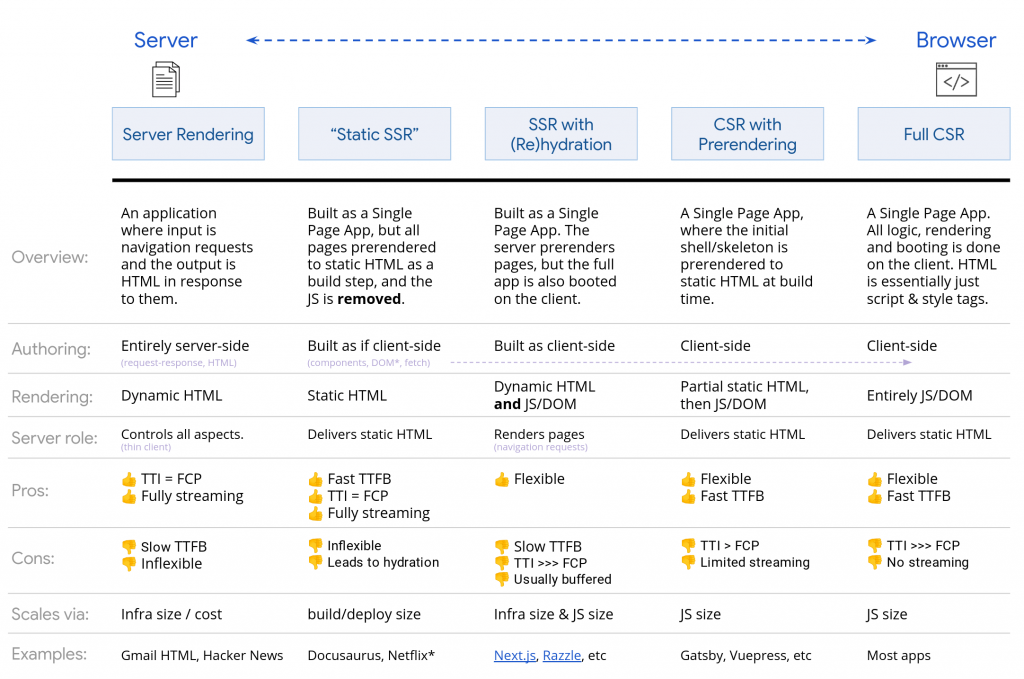

Feasibility Prototypes

The feasibility prototype validates the solution approach. Usually, the prototype is built by engineering to gain further insights into the implementation and to test technical risks (e.g. scalability, performance). The prototype might be no more than a code fragment or a successful validation of a 3rd-party software or API integration. Product people may not be involved in the prototype at all.

Live Data Prototypes

The purpose of the live data prototype is to collect further evidence for or against a product decision. This prototype is more expensive to build than the user prototype, but still far less expensive than the actual product. The prototype is not the real product; it’s usually 5-10% of the real product. It is based on real data and supports quantitative A/B testing as well as qualitative testing. A small amount of real traffic can be sent to the prototype to collect data. Engineering is typically needed to create the live data prototype, which takes between 2 days and 2 weeks.
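One common way to send a small, stable share of real traffic to a prototype is to hash the user ID into a bucket. The sketch below is an illustration under assumptions, not something from the workshop: the function name `in_prototype`, the 1% default share and the salt string are all hypothetical.

```python
import hashlib


def in_prototype(user_id: str, share: float = 0.01,
                 salt: str = "live-data-prototype") -> bool:
    """Decide whether a user is routed to the live data prototype.

    Hashing (instead of random sampling) keeps the decision stable, so the
    same user always sees the same variant. share is the fraction of traffic
    (0.0 to 1.0) that should land on the prototype.
    """
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    # Interpret the first 8 bytes as an integer bucket in [0, 2**64).
    bucket = int.from_bytes(digest[:8], "big")
    return bucket < share * 2**64


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    routed = sum(in_prototype(u, share=0.05) for u in users)
    print(f"{routed} of {len(users)} users routed to the prototype")
```

Changing the salt per experiment gives each test an independent split, so the same users are not always the ones who see prototypes.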

A lot of people get excited when they see the live data prototype and tend to confuse the prototype with the real product. But there’s still a significant difference between production ready software and the live data prototype. The real product needs:

- All required use cases

- Instrumentation / analytics

- Test automation

- Scale and performance

- SEO work

- Maintainability

- Internationalization / localization

A good example of a live data prototype is Amazon’s “Frequently bought together” feature. The idea of building the feature was rejected by the SVP Marketing, and it was simply too expensive and risky to productize the feature without further evidence. So the team decided to build a live data prototype and expose it to a small amount of real traffic in a specific product category. The prototype was A/B-tested and the collected data showed a significant uplift in business KPIs. This is a great example of a high-integrity business case.

Hybrid Prototypes

Hybrid prototypes mix those elements needed to tackle the specific risks at hand. They blend various techniques and are mainly limited by your own creativity.

A good example of a hybrid prototype is Zappos. Zappos solved the problem of enabling female shoppers to buy fashion shoes online. They defined and understood their personas and their key problems with shopping online: 1) returning goods, 2) no timely delivery, 3) not knowing the size, 4) bad product images. Zappos prototyped a potential solution to the personas’ problems by mixing a variety of prototypes: a user prototype (appealing front-end), a live data prototype (product catalogue and images) and a “Wizard of Oz” prototype (buying the shoes at the shop across the street and delivering them to the customer). Most importantly, users should not notice that the solution was a prototype. Zappos controlled the amount of traffic via AdWords and made sure they could handle the manual part. With this mixture of prototypes, which certainly doesn’t scale, they could validate demand, value and usability.

Testing Product Ideas

“Prototype as if you know you’re right, but test as if you know you’re wrong.”

d.school

Marty writes in more detail about the various ways of testing in his blog post “Prototype Testing”.

5. Testing Usability

Usability testing means interacting with customers and getting their feedback. For the test session, have the prototype up and running and focus on the prepared questions to understand whether users have issues using your product. The session may be conducted at your office, at the customer’s office, at a mutually convenient location (e.g. Starbucks) or, if that is not possible, remotely via video conference.

Users are recruited via the Customer Discovery Program in a B2B context and via AdWords in B2C. AdWords allows acquisition of users based on keywords and / or geo-targeting. It’s the most cost-effective solution and easy to stop and restart. Payment is entirely based on performance.

6. Testing Value

Testing value focuses on three aspects: testing demand (is this really a problem?), testing efficacy (how well does the product solve the problem?) and testing response (how excited are the testers?).

Testing Demand

Testing demand answers the question of whether people are willing to use the product, whether they understand the value and whether they see the product solving a real problem they have. Marty talks more about Desirability Testing in his blog post “Product Validation”. Some techniques for demand testing are described below.

Fake Door Test

A fake door test fakes a product feature for the customer. If the customer interacts with the fake feature, a thank-you message is displayed and sometimes contact information is collected. Nothing else happens. The goal of the test is to collect data by measuring the click-through rate. More information on the fake door test can be found here: http://learningloop.io/plays/fake-door-testing.

Landing Page Test

A landing page test pitches product features, products, product lines or other promises to the customer, combined with an explicit call to action. Drive traffic to the landing page via e.g. AdWords or comparable methods. Then measure the conversion: how many people actually interact with the landing page and are interested enough to follow the call to action? After the click, nothing happens for the customer other than a friendly thank-you message and sometimes a request for contact details. More information on the landing page test can be found here: http://learningloop.io/plays/spoof-landing-pages
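Because fake door and landing page tests often run on little traffic, it can help to report the measured rate together with an uncertainty range. The sketch below computes the conversion rate and a Wilson score interval; the function name `conversion_with_interval` and the 95% level (z = 1.96) are assumptions chosen for illustration, not part of the workshop material.

```python
import math


def conversion_with_interval(conversions: int, visitors: int, z: float = 1.96):
    """Return (rate, lower, upper) for a click-through or conversion rate.

    Uses the Wilson score interval, which behaves better than the naive
    normal approximation when rates are small or traffic is low.
    z = 1.96 corresponds to roughly 95% confidence (an assumed default).
    """
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    if not 0 <= conversions <= visitors:
        raise ValueError("conversions must be between 0 and visitors")

    rate = conversions / visitors
    denom = 1 + z**2 / visitors
    centre = (rate + z**2 / (2 * visitors)) / denom
    half_width = (z / denom) * math.sqrt(
        rate * (1 - rate) / visitors + z**2 / (4 * visitors**2)
    )
    return rate, max(0.0, centre - half_width), min(1.0, centre + half_width)


if __name__ == "__main__":
    rate, low, high = conversion_with_interval(conversions=30, visitors=1000)
    print(f"conversion {rate:.1%}, plausible range {low:.1%} to {high:.1%}")
```

A wide range is a hint to keep the test running before reading too much into the number.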

Explainer Video

The explainer video shows a high fidelity prototype at work. It’s basically a video of a product demo. It is then distributed and measured like the landing page test above. The goal, again, is to measure demand for the demoed product. More on the explainer video: http://learningloop.io/plays/video-demo

Kickstarter Testing

A great way to test a product idea without jeopardizing your brand is to test demand on kickstarter.com. Just place the product idea on the crowdfunding platform as a “nobody”. If the idea creates enough buzz, it’s worth a further investment; if not, it can be dropped silently without creating any noise. Read more on the idea in Mark Dwight’s “How to Kickstart Your Market – Why even established companies can use crowdfunding.”

Qualitative Value Testing

“Find everything that’s wrong with the product and fix it; Seek negative feedback.”

Elon Musk

Qualitative testing explains why something is or isn’t working; it gives insight into why something is or isn’t happening. It doesn’t try to prove anything. You won’t get the answer from any one user test; every single test provides another piece of the puzzle. It’s important to test with real users and customers to judge the value.

Qualitative value testing is done with prototypes or the real product. It provides insights from both a usability and a value perspective. On top of that, it usually provides unexpected insights from the customer. It’s typically done fast and cheap. To really understand how much customers value the product, various questions or tests can be used:

- Payment – will they pay for it? (Credit card information, pre-order form, letter of intent in B2B.)

- Reputation – will they recommend it? (NPS, introduction to peers or the boss, public reference.)

- Time – will they meet again? Will they invest their time? (Agreement on follow-up meeting, non-trivial trial.)

- Behavior – will they switch from their current solution?
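For the reputation question, NPS is a single number derived from answers to “How likely are you to recommend this?” on a 0-10 scale. The sketch below uses the standard definition (promoters score 9-10, detractors 0-6); the function name `net_promoter_score` is a hypothetical helper, not something from the workshop.

```python
def net_promoter_score(ratings):
    """Compute the Net Promoter Score from 0-10 recommendation ratings.

    NPS = percentage of promoters (9-10) minus percentage of detractors (0-6).
    Passives (7-8) count toward the total but toward neither group.
    The result ranges from -100 to +100.
    """
    ratings = list(ratings)
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(not 0 <= r <= 10 for r in ratings):
        raise ValueError("ratings must be between 0 and 10")

    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100 * (promoters - detractors) / len(ratings)


if __name__ == "__main__":
    answers = [10, 9, 9, 8, 7, 6, 3]
    print(f"NPS: {net_promoter_score(answers):.0f}")
```

With the handful of participants typical for qualitative tests, the score is a conversation starter rather than a statistic; the reasons behind each rating matter more.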

Quantitative Value Testing

“Features are not inherently valuable. The value for our customers is only realized when a feature fulfills a need. It’s only realized for our business when we see the results of our work move the needle. That’s why we need to concentrate on the outcomes over the outputs.”

Melissa Perri (see: https://melissaperri.com/)

Quantitative value testing can provide evidence or even proof that something truly works, or doesn’t. It generally cannot explain why. It’s done to get a clearer picture of the impact on your revenue, your brand, your customers and also your employees.

Quantitative testing can be done with the existing product, or a live data prototype, in an A/B testing setup on a certain share of your traffic. Alternatively, it can be done with a limited number of your customers by invitation. In a B2B scenario, you’d use your existing customer relationships from the Customer Discovery Program to expose people to the test.

A good example of a quantitative value test is Spotify’s “Discover Weekly” feature. Data collected in an A/B test was compelling enough to fully implement the feature. The launch-ready implementation involved some big hurdles and a lot of effort on the data-crunching side, so it was well worth testing before putting the feature into Delivery.
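Whether A/B data is “compelling enough” is usually judged with a significance test. The sketch below uses a two-proportion z-test on conversion counts; this is one common choice and an assumption here, since the notes do not say which analysis Spotify or Amazon used. The function name `two_proportion_z_test` and the example numbers are hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Compare conversion of control (A) and variant (B).

    Returns (uplift, z, p_value), where uplift is the absolute difference
    in conversion rate (B minus A) and p_value is two-sided.
    """
    if n_a <= 0 or n_b <= 0:
        raise ValueError("both groups need at least one visitor")

    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("no variation in the data; test is undefined")

    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_b - rate_a, z, p_value


if __name__ == "__main__":
    uplift, z, p = two_proportion_z_test(conv_a=100, n_a=1000,
                                         conv_b=150, n_b=1000)
    print(f"uplift {uplift:+.1%}, z = {z:.2f}, p = {p:.4f}")
```

A small p-value says the difference is unlikely to be noise; whether the uplift is worth the delivery effort remains a business decision.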

7. Testing Feasibility

Testing feasibility mainly addresses technical concerns: are we able to build this at all? To test feasibility, it’s important to create prototypes that focus on the key areas of concern. Emphasize speed of learning over reuse of the written code. Your tech people need to answer these questions:

- Do we know how to build this?

- Do we have the skills on the team?

- Do we have enough time?

- Do we have the right architecture or components?

- Do we understand the dependencies?

- Will the performance and scale meet our needs?

- Do we have the infrastructure to test and run this?

8. Testing Business Viability

Business viability (does this feature / product fit with our business?) needs to be addressed for the product to succeed within your own organization. Your stakeholders (e.g. Senior Executives, Sales, Marketing, Finance, Legal, Security, Business Operations, …) need to be informed regularly, and you need to earn their trust. Have discussions with them and make them feel understood, but remember: everyone has a voice, but not a vote! Try to engage with them individually; group meetings can cause a lot of damage and are harder to handle. When talking to your stakeholders, have your data ready: data always beats opinions. And read the signs during the meeting: differentiate between stop signs and yield signs.

Techniques you can use in a stakeholder meeting are typically a high-fidelity user prototype and / or a product walkthrough.

This blog post is part of a series. It summarizes my personal notes from the workshop “How to Create Tech Products Customers Love”, held by Marty Cagan on 5 and 6 June 2019 in San Francisco.

- #1 Foreword

- #2 Introduction & Root Causes of Product Failures

- #3 Key Terms & Concepts

- #4 Product Teams & Product People

- #5 Product Vision & Objectives

- #6 Product Analytics

- #7 Product at Scale

- #8 Product Development Process

- #9 Product Discovery Principles

- #10 Product Discovery Techniques

- #11 Product Culture & Transformation